
Has it been suggested that your child undergo a learning assessment? Are you unsure what a learning assessment involves or achieves? Your Mind Matters Psychology Services is here to help!

What are Learning Assessments?

Learning assessments, also referred to as educational or psychoeducational assessments, explore an individual’s achievement across different academic areas. They involve gathering information in a standardised manner to better understand an individual’s learning profile and the factors that may be affecting their ability to learn. This information can then be used to guide how young people are helped to learn and to develop their skills to their full potential.

When Should Learning Assessments be Completed?

There are many reasons why a learning assessment may be needed. Often, a teacher may recommend a learning assessment due to certain difficulties observed in a student’s academic performance. A paediatrician may refer a young person for a learning assessment because of behavioural concerns reported in the classroom. Parents/guardians may be querying why it is so difficult for their child to learn to read, write and/or complete maths problems. A young person may have concerns about their grades.

Ultimately, there is no ‘right’ time to undertake a learning assessment. However, the earlier difficulties can be identified, the earlier necessary supports can be put in place. Learning difficulties are associated with low self-esteem and with emotional and behavioural difficulties (Alesi et al., 2014; Klassen et al., 2013). Therefore, earlier intervention can help mitigate these challenges and improve long-term outcomes for many individuals (Skues & Cunningham, 2011).

Why Undergo a Learning Assessment?

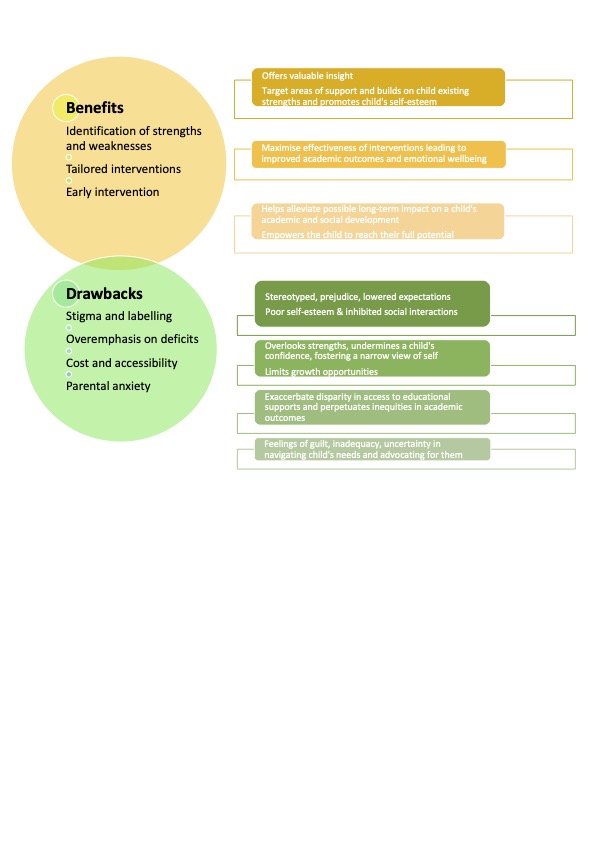

The benefits of completing a learning assessment can include:

- Obtaining a better understanding of a young person’s cognitive and academic strengths and weaknesses.

- Learning how to best support a young person’s learning through tailored strategies and recommendations.

- Determining whether a young person has a learning disability, such as a Specific Learning Disorder (SLD) with impairment in reading (dyslexia), written expression (dysgraphia) and/or mathematics (dyscalculia).

- Understanding whether a young person is being sufficiently challenged academically at school.

- Making informed decisions regarding a young person’s education, including school placement and applying for special considerations (e.g., extra time on exams).

- Supporting the development of a young person’s self-esteem and confidence by providing them with an opportunity to gain insight into their learning profile (and potential reasons behind their difficulties).

How are Learning Assessments Completed?

Learning assessments can vary depending on the individual, their needs and referral reason; however, the typical process includes:

- An initial intake interview with parents/guardians and the young person (if appropriate), where detailed information about the young person’s development and learning history is gathered by the psychologist.

- Collection of information from other professionals involved in the young person’s care (such as teachers, doctors, school counsellors and speech pathologists) where the assessing psychologist considers it useful, as this can help provide an understanding of the young person’s functioning in different environments.

- A cognitive assessment, where the young person works individually with the psychologist to complete a range of tasks, including questions, puzzles, and memory activities. This assessment will provide information about how the young person thinks, solves problems, processes information and remembers.

- An academic assessment, where the young person works individually with the psychologist to complete a range of reading, writing, mathematics and oral language tasks.

- A written report that includes all of the assessment results, as well as recommendations for intervention and/or support.

- A feedback session, whereby the psychologist will explain the outcomes of the assessment to the parents/guardians and young person (if appropriate). This session also provides clients with the opportunity to ask the psychologist any questions about the results or steps moving forward.

In addition to the assessment of cognitive and academic abilities, learning assessments at Your Mind Matters Psychology Services can also include the exploration of other factors related to learning such as attention, motivation, affect, and behaviour.

What Now?

If you have queries or concerns regarding your child’s learning, or if you would like further information regarding learning assessments (including availability), please contact Your Mind Matters Psychology Services on (03) 9802 4653. Our team of psychologists is passionate about uncovering young people’s learning potential and discovering ways to help them achieve it!

References

Alesi, M., Rappo, G., & Pepi, A. (2014). Depression, anxiety at school and self-esteem in children with learning disabilities. Journal of Psychological Abnormalities, 1–8. http://dx.doi.org/10.4172/2329-9525.1000125

Klassen, R., Tze, V., & Hannok, W. (2013). Internalizing problems of adults with learning disabilities. Journal of Learning Disabilities, 46(4), 317–327. https://doi.org/10.1177/0022219411422260

Skues, J., & Cunningham, E. (2011). A contemporary review of the definition, prevalence, identification and support of learning disabilities in Australian schools. Australian Journal of Learning Difficulties, 16(2), 159–180. https://doi.org/10.1080/19404158.2011.605154